Cloud Management Software (CMS) provides high-level abstraction and virtualization of the underlying computing, networking, and storage resources, making their provisioning flexible, dynamic, and fast. Unfortunately, mapping virtual resources onto physical servers and devices is not a trivial task; for this reason, CMS often implements very simple algorithms, which lead to inefficient usage of the infrastructure.

Many research papers have already investigated allocation strategies that make the best use of physical resources under different objectives: constraint and Quality of Service (QoS) satisfaction, energy efficiency, security, cooling efficiency, and so on [1]–[3]. Applying such theoretical frameworks in practice requires suitable APIs for controlling the operation of the CMS. This is largely possible for computing, since most CMS already provide “migration” functions to move Virtual Machines (VMs) between physical servers (often used to shut down servers for maintenance); however, no similar feature is available for networking.
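As a toy illustration of the kind of allocation strategy involved (not the method of any of [1]–[3]), the sketch below consolidates VMs onto as few identical servers as possible with first-fit decreasing, a classic bin-packing heuristic commonly used as a simple baseline for energy-aware placement, since servers left empty can be switched off. It assumes a single resource dimension (CPU cores) and uses hypothetical VM names; real placement would also weigh memory, network traffic, and QoS constraints.

```python
from typing import Dict, List


def first_fit_decreasing(vm_cores: Dict[str, int], server_cores: int) -> List[List[str]]:
    """Pack VMs onto as few identical servers as possible.

    vm_cores maps a (hypothetical) VM name to the cores it needs.
    Returns one list of VM names per server that must stay powered on.
    """
    servers: List[List[str]] = []
    free: List[int] = []
    # Place the largest VMs first: this usually reduces fragmentation.
    for vm, cores in sorted(vm_cores.items(), key=lambda kv: kv[1], reverse=True):
        if cores > server_cores:
            raise ValueError(f"{vm} does not fit on any server")
        for i, room in enumerate(free):
            if cores <= room:  # first active server with enough room
                servers[i].append(vm)
                free[i] -= cores
                break
        else:
            # No active server has room left: power on a new one.
            servers.append([vm])
            free.append(server_cores - cores)
    return servers
```

For VMs needing 6, 4, 3, 2, and 1 cores on 8-core servers, the sketch fills exactly two servers, which is the minimum for 16 cores in total.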

Virtual networks created by cloud tenants are usually implemented with tunneling techniques (e.g., Virtual LANs, IP GRE) over a legacy communication infrastructure with either a flat (i.e., layer-2 only) or a hierarchical (IP routing) organization. Tunneling makes it possible to deliver packets between any pair of VMs, independently of the underlying network topology and organization, by relying on the native forwarding and switching capabilities of protocols like Ethernet and IP. This approach results in a reliable and fault-tolerant solution, but it lacks the ability to provide service guarantees, security, isolation, and efficient bandwidth utilization.
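VLAN tagging is the simplest of the techniques mentioned above. As a minimal sketch (function names and the sample frame are illustrative), the code below inserts and removes an IEEE 802.1Q tag: 4 bytes, made of the TPID 0x8100 followed by a 16-bit Tag Control Information field (3-bit priority, 1-bit DEI, 12-bit VLAN ID), placed right after the source MAC address. Switches then forward the tagged frame with their native Ethernet logic, keeping each tenant's traffic in its own VLAN without knowing anything about the VMs.

```python
import struct
from typing import Tuple

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def add_vlan_tag(frame: bytes, vlan_id: int, pcp: int = 0) -> bytes:
    """Insert an 802.1Q tag into an untagged Ethernet frame (FCS not included)."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7:
        raise ValueError("priority must be in 0..7")
    # TCI = 3-bit priority | 1-bit DEI (left at 0) | 12-bit VLAN ID
    tci = (pcp << 13) | vlan_id
    tag = struct.pack("!HH", TPID_8021Q, tci)
    # Destination MAC (6 bytes) + source MAC (6 bytes), then the tag.
    return frame[:12] + tag + frame[12:]


def strip_vlan_tag(frame: bytes) -> Tuple[int, bytes]:
    """Remove the 802.1Q tag, returning (vlan_id, untagged frame)."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        raise ValueError("frame is not 802.1Q tagged")
    return tci & 0x0FFF, frame[:12] + frame[16:]
```

The 12-bit VLAN ID field limits a network to 4094 usable VLANs (IDs 0 and 4095 are reserved).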

To effectively tackle the challenging new software development paradigms that entail some form of application-defined networking, we need tighter control of the network. In this respect, CMS should be extended with an additional API that allows programmatic control of the communication infrastructure. The main challenge in this context is to properly balance the performance requirements and constraints set by applications against the management policies and objectives of the infrastructure owners.

The design of Highly-Distributed Applications (HDAs) increasingly demands reliable, dependable, and trustworthy communication with tight performance constraints, and this trend will continue. HDAs will require tight bounds on bandwidth, latency, jitter, availability, resilience, security, and traffic isolation. On the other hand, infrastructure owners aim at exploiting the full switching capacity of their network devices and the full bandwidth of their links. In very high-density installations such as data centers, traffic engineering is the only viable way to avoid the technical and economic burden of heavy overprovisioning. In this context, Software Defined Networking (SDN) enables tighter and more flexible control of the networking infrastructure, opening the way to novel management paradigms and cost-effective operation well beyond what legacy distributed routing and spanning tree protocols allow.
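As a minimal illustration of how traffic engineering can stand in for overprovisioning, the sketch below greedily routes each flow on the candidate path that keeps the busiest link least loaded, instead of sending everything along a single default path. The topology, path lists, and demands are hypothetical, and this greedy min-max heuristic is only one simple instance of traffic engineering, not an optimal solver.

```python
from typing import Dict, List, Tuple

Link = Tuple[str, str]  # directed link (from_node, to_node)


def place_flows(
    flows: Dict[str, Tuple[float, List[List[Link]]]],
    capacity: Dict[Link, float],
) -> Tuple[Dict[str, List[Link]], float]:
    """Route each flow on the candidate path that keeps the maximum link
    utilization lowest; return (chosen paths, resulting max utilization)."""
    load = {link: 0.0 for link in capacity}
    chosen: Dict[str, List[Link]] = {}
    # Route the largest flows first, while there is most freedom to spread them.
    for name, (demand, paths) in sorted(flows.items(), key=lambda kv: kv[1][0], reverse=True):

        def peak(path: List[Link]) -> float:
            # Utilization of the busiest link on `path` if this flow were added.
            return max((load[link] + demand) / capacity[link] for link in path)

        best = min(paths, key=peak)  # ties go to the first candidate path
        for link in best:
            load[link] += demand
        chosen[name] = best
    max_util = max(load[link] / capacity[link] for link in capacity)
    return chosen, max_util
```

With three flows of 6, 5, and 3 Gb/s between two leaf switches joined by two 10 Gb/s spine paths, the sketch keeps peak utilization at 80%, whereas forcing all flows onto one path would require 14 Gb/s on a 10 Gb/s link.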

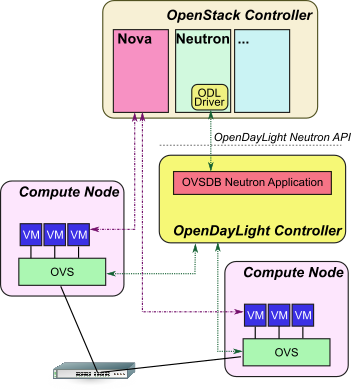

Despite the unprecedented opportunities and the strong interest around SDN technologies, their application in CMS is still largely unexplored. As already happens for computing and storage resources, we need a mechanism to match virtual resources with the physical infrastructure. OpenStack, the largest open-source community for CMS, currently includes an SDN mechanism driver. The driver uses an SDN controller (OpenDayLight) to configure Open vSwitch (OvS) instances in the physical servers, but it does not provide any control over the networking infrastructure (see Figure 1). In the framework depicted in Figure 1, OpenStack Nova creates the VMs (purple arrows), while OpenStack Neutron connects them to their tenant virtual networks by means of the OpenDayLight controller (green arrows); however, packet forwarding between OvS instances still relies on tunneling techniques.

Figure 1. OpenStack integration with OpenDayLight for the configuration of Open vSwitch instances in Compute Nodes.

To align the current OpenStack/SDN framework with the upcoming requirements of HDAs, all physical networking devices should be brought, together with the software switches, under the control of the SDN controller, and a high-level business logic should define networking strategies. In this vision, the SDN controller is responsible for binding VMs to their network flows, whereas the business logic performs traffic engineering by defining network partitions and paths according to the tenants’ requirements and the owner’s policies (Figure 2). The business logic should be part of an orchestrator, which deploys HDAs according to their execution requirements and the infrastructure policies. The framework depicted in Figure 2 shows that the OpenDayLight controller should also set packet flows throughout the physical network, e.g., by means of OpenFlow, based on high-level policies set by the business logic.
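The division of labour described above can be sketched under heavy simplifying assumptions: the business logic picks the fewest-hop path whose links all have enough free bandwidth for a tenant's request, then turns it into one match/action rule per switch, which an SDN controller could install (e.g., via OpenFlow). The topology format, node names, and rule dictionaries are hypothetical and controller-agnostic; they are not the OpenDayLight API or the OpenFlow wire format.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical topology: node -> {neighbour: (output_port, free_bandwidth_mbps)}.
# Hosts (e.g. compute nodes) and switches are both nodes; only switches get rules.
Topology = Dict[str, Dict[str, Tuple[int, float]]]


def constrained_path(topo: Topology, src: str, dst: str, bw: float) -> Optional[List[str]]:
    """Fewest-hop path (BFS) using only links with at least `bw` Mb/s free."""
    parent: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk back through the BFS tree to rebuild the path.
            path: List[str] = []
            cur: Optional[str] = node
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        for nbr, (_port, free) in topo[node].items():
            if free >= bw and nbr not in parent:
                parent[nbr] = node
                queue.append(nbr)
    return None  # no path satisfies the bandwidth requirement


def flow_rules(topo: Topology, path: List[str], ip_src: str, ip_dst: str) -> List[dict]:
    """One match/action rule for each switch between the two end hosts."""
    return [
        {
            "switch": sw,
            "match": {"ipv4_src": ip_src, "ipv4_dst": ip_dst},
            "actions": [{"output": topo[sw][nxt][0]}],
        }
        for sw, nxt in zip(path[1:-1], path[2:])
    ]
```

Keeping path selection (business logic) separate from rule generation (controller side) mirrors the split in Figure 2: policies can change without touching how rules are pushed to devices.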

Figure 2. OpenStack/OpenDayLight framework for controlling the physical network through an SDN controller. Red lines show the parts missing from current software.

We intend to implement the scenario envisioned above in the ARCADIA framework. In ARCADIA, the Smart Controller automatically parses applications to extract policies and context information, and then configures the execution environment accordingly. The interface to the OpenDayLight controller enables the Smart Controller to set QoS requirements for specific applications, to “spread” traffic flows over the entire networking infrastructure, to provide backup paths, and to quickly recover from link or hardware failures. We believe such an approach would be of great interest to other researchers in this field, and we plan to make our open-source software available to everyone for further extensions and applications.
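As an illustration of the backup-path and fast-recovery features listed above (not the ARCADIA implementation), the sketch below computes a fewest-hop primary path, then a link-disjoint backup by banning the primary's links and searching again, and falls back to the backup when a link of the primary fails. The graph format and function names are hypothetical. This remove-and-recompute approach is simple but can miss a disjoint pair in some topologies where one exists; Suurballe's algorithm handles that case exactly.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Graph = Dict[str, Set[str]]  # undirected adjacency sets
Link = FrozenSet[str]        # undirected link {u, v}


def bfs_path(graph: Graph, src: str, dst: str,
             banned: Optional[Set[Link]] = None) -> Optional[List[str]]:
    """Fewest-hop path from src to dst that avoids every link in `banned`."""
    banned = banned or set()
    parent: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path: List[str] = []
            cur: Optional[str] = node
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        for nbr in sorted(graph[node]):  # sorted only to make results deterministic
            if nbr not in parent and frozenset((node, nbr)) not in banned:
                parent[nbr] = node
                queue.append(nbr)
    return None


def links(path: List[str]) -> Set[Link]:
    return {frozenset(hop) for hop in zip(path, path[1:])}


def primary_and_backup(graph: Graph, src: str, dst: str):
    primary = bfs_path(graph, src, dst)
    if primary is None:
        return None, None
    # Ban every link of the primary path so that the backup is link-disjoint.
    return primary, bfs_path(graph, src, dst, banned=links(primary))


def path_after_failure(primary, backup, failed: Tuple[str, str]):
    """Return the first path that does not use the failed link, or None."""
    down = frozenset(failed)
    for path in (primary, backup):
        if path is not None and down not in links(path):
            return path
    return None
```

Precomputing the backup lets the controller switch traffic as soon as a failure is reported, instead of running a path search at that moment.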

[1] H. Shirayanagi, H. Yamada, and K. Kono, “Honeyguide: A VM migration-aware network topology for saving energy consumption in data center networks,” in IEEE Symposium on Computers and Communications (ISCC), Cappadocia, Turkey, Jul. 1–4, 2012, pp. 460–467.

[2] W. Fang, X. Liang, S. Li, L. Chiaraviglio, and N. Xiong, “VMPlanner: Optimizing virtual machine placement and traffic flow routing to reduce network power costs in cloud data centers,” Computer Networks, vol. 57, no. 1, pp. 179–196, Jan. 2013.

[3] L. Wang, F. Zhang, A. V. Vasilakos, C. Hou, and Z. Liu, “Joint virtual machine assignment and traffic engineering for green data center networks,” ACM SIGMETRICS Performance Evaluation Review, vol. 41, no. 3, pp. 107–112, Dec. 2013.